Figure: Sophia, the first robot citizen, at the Global A.I summit 2018. Sophia is a social robot that, according to its makers, can take care of the sick and help in roles involving social interaction (Source: Wikimedia Commons, ITU Pictures)

Singularity, also known as “machine consciousness”, is a concept that marks the threshold at which A.I can develop without human help (Prescott, 2013). If A.I machines achieve singularity, they will be able to make decisions on their own without relying on new software code written by humans. Current intelligent robots like Sophia are not conscious. Robots such as Sophia are, however, mutable, meaning that they can modify their own code to adapt to their current environment. According to Hanson Robotics (2016), Sophia’s underlying A.I principles can be combined in different, unique ways to give novel responses to a new situation. Sophia can therefore work out what to do next based on cues from its environment. For instance, if Sophia’s operator is sad and initiates a conversation explicitly stating his or her feelings, Sophia is able to give consoling responses (Hanson Robotics, 2016).

Ultimately, Sophia achieves this by referring to algorithms in its internal software, which are code-based recipes for performing specific tasks. In layman’s terms, the algorithm for this specific situation would be something like: ‘Output happiness (a smiling face + consoling words) when your operator is sad’. Because Sophia has to refer to something already predetermined by code, it is unable to truly make decisions on its own, even though the code is robust enough to produce different outputs for a multitude of different situations. This is safer for people, as the developers retain good control over the robot. However, if a conscious machine is ever manufactured, it will be primed to learn automatically on its own without being bound to its initial, programmed code alone (Chella et al., 2019). It could become as hard to predict such a machine’s actions as it is to predict how a human is going to react in any given scenario.
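The 'when your operator is sad' recipe described above can be pictured as a simple lookup from a detected emotion to a scripted reaction. The sketch below is only an illustration of that idea and is not Hanson Robotics’ actual software: the names `RESPONSE_RULES`, `DEFAULT_RESPONSE`, and `respond`, as well as the example phrases, are assumptions made for this example.

```python
# Hypothetical rule table (an assumption for illustration, not Sophia's real code):
# detected operator emotion -> (facial expression, spoken words).
RESPONSE_RULES = {
    "sad": ("smile", "I'm sorry you feel that way. I'm here with you."),
    "happy": ("smile", "That's wonderful to hear!"),
}

# Fallback used when no rule matches the detected emotion.
DEFAULT_RESPONSE = ("neutral", "Tell me more.")


def respond(operator_emotion: str) -> tuple[str, str]:
    """Return the predetermined (expression, words) pair for an emotion."""
    return RESPONSE_RULES.get(operator_emotion.lower(), DEFAULT_RESPONSE)


expression, words = respond("sad")
print(expression, "|", words)
```

However many rules are added to the table, every output is still chosen by the developers in advance, which is the sense in which such a robot does not decide for itself.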

Robot production has advanced rapidly in recent years, and Hanson Robotics, based in Hong Kong, is one company seeking to ramp up mass production of robots by the end of 2021. The company believes that robots and automation can help during the current Covid-19 pandemic by keeping people safe (Hennessy, 2021). Indeed, automation already assists in key areas such as flight automation. However, substantial parts of the programming in such a system are immutable. Immutability is the idea that once something is created, it cannot be altered (Parsons, 2020). This means that the core functions of these systems, such as the autopilot in planes, cannot be reprogrammed to do anything other than maintain altitude and flight path once they have been incorporated into the plane. These systems are categorized as narrow A.I. solutions, where the function of the system cannot be changed once it has been manufactured.
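In programming terms, immutability in the sense used above can be sketched with a frozen data class, whose fields cannot be reassigned after creation. This is a minimal sketch and not real avionics code: `AutopilotConfig`, its fields, the example values, and `try_to_repurpose` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, FrozenInstanceError


@dataclass(frozen=True)  # frozen=True makes instances immutable
class AutopilotConfig:
    """Hypothetical settings object; the fields are illustrative assumptions."""
    target_altitude_ft: int
    heading_deg: int


config = AutopilotConfig(target_altitude_ft=35000, heading_deg=270)


def try_to_repurpose(cfg: AutopilotConfig) -> bool:
    """Attempt to alter the object; return True only if the change succeeded."""
    try:
        cfg.heading_deg = 90  # assignment to a frozen field raises an error
    except FrozenInstanceError:
        return False
    return True


print(try_to_repurpose(config))
```

A mutable system, by contrast, would accept the assignment and carry on with the new value.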

With increasing technological advancements, many A.I. experts anticipate A.I achieving singularity as soon as 2060 (AIMultiple, 2017). Prescott (2013) argues that in a worst-case scenario, “homo sapiens might be replaced by intelligent machines as the dominant species of the earth.” At this point, A.I may cease to be helpful to humans and instead become harmful. In their recent paper, Mele & Shepherd (2021) postulated that free will can have negative effects because it encourages implicit attitudes in humans. In his writing, Alfred Mele referenced the Vohs and Schooler experiment, in which participants took a test under different conditions.

The experiment conducted by Vohs & Schooler (2008) tested one hundred and twenty-two undergraduates (forty-six females, seventy-five males and one participant who did not specify their gender) on a Graduate Record Examination (GRE) practice test. The participants were randomly assigned to five conditions. Three of the conditions (“free-will”, “determinism” and “neutral”) allowed cheating. In these three conditions, participants arrived in the laboratory in groups of two to five and were assigned spaces to take the test. The resulting sense of anonymity provided an opportunity to cheat. The other two conditions, designated “non-cheating”, did not allow cheating, as participants were tested individually. In the conditions where cheating was possible, subjects read statements before beginning the aptitude test. The statements in the free-will condition, for example, asserted that participants were capable of overriding the genetic and environmental factors that influenced their decisions. In the determinism condition, the statements asserted that powerful, lawful forces govern people’s behaviours and that, ultimately, the environment programs those behaviours. In the neutral condition, the participants read general facts that had nothing to do with influences on behaviour. The test consisted of comprehension, mathematical, and logic and reasoning problems, and participants were to receive one dollar for each problem they solved correctly.

Participants in the free-will, determinism, and neutral conditions completed a free-will determinism (FWD) scale assessment so that it could be determined whether the manipulation statements had a bearing on their responses to the GRE practice test. The results are reported below as means (M) and standard deviations (SD). Participants in the free-will condition reported stronger beliefs in free will (M = 23.09, SD = 6.42) than did participants in the neutral condition (M = 20.04, SD = 3.76) (p < .01). Participants in the determinism condition reported weaker beliefs in free will (M = 15.56, SD = 2.79) than did participants in the neutral condition (p < .01). Furthermore, participants in the determinism condition had higher scores (M = 23.14, SD = 2.69) than those in the neutral and free-will conditions (neutral: M = 20.40, SD = 3.40; free will: M = 20.78, SD = 3.21) (p < .01).

In the cheating conditions, participants paid themselves after scoring and shredding their own answer sheets, whereas in the non-cheating conditions, the experimenter paid participants according to their actual performance. To detect cheating behaviour, the experimenters compared the payments in the self-paid (cheating) groups with those in the experimenter-paid groups. In the three self-paid conditions, the participants’ answer sheets were not available because they had been shredded, so the number of dollar coins taken was divided by the number of participants who had been randomly assigned to the condition to give an average payment. An analysis of variance (ANOVA) was then conducted to compare the average amount of money made in each group. The analysis showed a significant effect of condition (p < .01), with the baseline experimenter-scored and determinism experimenter-scored conditions serving as the comparison groups. To conclude, participants who had read the determinism statements and who were allowed to pay themselves for correct answers walked away with more money than the others (p < .01).

This implies that with the capacity for free will unbounded by rules often comes the choice to perform unfair actions without limitation. If this automatic trait is inherited by conscious A.I, one can imagine how destructive it would be. In other words, humans are capable of acting in unfair and even dangerous ways – and having another population of sentient beings that share the negative traits of humanity could spell disaster for the human race, especially if AI is able to command a more powerful hold on the world’s resources.

Considering that human intelligence is limited and the sophistication of machines is growing, there may be no way to deter machines from achieving singularity if we continue producing them with the aim of achieving consciousness (AIMultiple, 2017). That being said, the recent shutdown of Facebook A.I robots demonstrates that there is some uncertainty within the industry about continuing to create conscious A.I robots (Kenna, 2017). The aforementioned experiments assessing the psychological concepts of free will and the bystander effect, applied to A.I, suggest that further caution is warranted.

References

- AIMultiple. (2017). 995 expert’s opinion: AGI / singularity by 2060 [2020 update]. AppliedAI. https://research.aimultiple.com/artificial-general-intelligence-singularity-timing/

- Hennessy, M. (2021). Makers of Sophia the robot plan mass rollout amid pandemic. Reuters. https://www.reuters.com/article/us-hongkong-robot-idUSKBN29U03X

- Kenna, S. (2017). Facebook Shuts Down AI Robot After It Creates Its Own Language. Huffington Post. https://www.huffingtonpost.com.au/2017/08/02/facebook-shuts-down-ai-robot-after-it-creates-its-own-language_a_23058978/

- Mele, A., & Shepherd, J. (2021). Situationism and Agency. Journal of Practical Ethics. https://www.jpe.ox.ac.uk/papers/situationism-and-agency/

- Parsons, D. (2020). How to write IMMUTABLE code and never get stuck debugging again. DEV Community. https://dev.to/dglsparsons/how-to-write-immutable-code-and-never-get-stuck-debugging-again-4p1

- Prescott, T. J. (2013). The AI Singularity and Runaway Human Intelligence. Biomimetic and Biohybrid Systems, 6084, 438–440. https://doi.org/10.1007/978-3-642-39802-5_59

- Hanson Robotics. (2016). Sophia – Hanson Robotics. Hanson Robotics. https://www.hansonrobotics.com/sophia/

- Chella, A., Cangelosi, A., Metta, G., & Bringsjord, S. (2019). Editorial: Consciousness in Humanoid Robots. Frontiers in Robotics and AI, 6. https://doi.org/10.3389/frobt.2019.00017

Related Posts

Prescribing Ecotherapy: A Powerful Health Intervention

This publication is in proud partnership with Project UNITY’s Catalyst Academy 2023...

Read MoreHow Tele-Mental Health Helps Us During the COVID-19 Pandemic

Figure 1: Telehealth consultation via smartphones can serve as a...

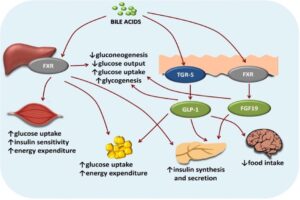

Read MoreBile Acid-Induced Satiety to Treat Obesity

Figure 1: Diagram of the effects of bile acids on...

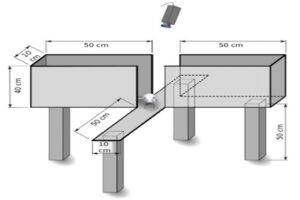

Read MoreThe Effects of Handling of Rats on Anxiety

Figure 1: Elevated Plus Maze used to measure anxiety-like behavior...

Read MoreThe Future of Wearable Devices

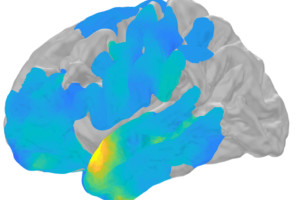

Figure: The colored sections of the brain represent the different...

Frankline Misango Oyolo