BigDog robots being tested under the supervision of a Boston Dynamics team member. BigDog is a quadruped military robot developed in 2005 by Boston Dynamics together with Foster-Miller, NASA's Jet Propulsion Laboratory, and the Harvard University Concord Field Station. Robots like BigDog are being developed to support military combat and crime-fighting operations in environments prone to terrorism, where it may be hard for them to evade attacks. Ordinary people may be willing to help robots in such situations, and scientists want to know what conditions prompt humans to do so. (Source: Wikimedia Commons, U.S. Marine Corps)

Introduction

The bystander effect is a psychological phenomenon in which the more people are present, the less likely any one of them is to help someone in an emergency. Observers are more likely to act when there are fewer witnesses (Cherry, 2018). A similar effect can occur when a more capable party (e.g., a physically strong individual) is present, because people tend to assume that this person will intervene. The effect is not unique to humans. Comparable behavior has been reported in other animals, which can leave a victim without help it might otherwise receive (Science Times, 2020).

The bystander effect may also apply when the victim is a robot. As robots are deployed to enforce the law in places where human intervention is less safe, such as regions prone to terrorism, they may face more frequent abuse in many forms (Ackerman, 2020). A robot in that situation may have to protect itself or rely on help from a nearby human. However, an ordinary bystander who could be overpowered by the attackers may hesitate to step in, which could strengthen the bystander effect further.

The Experiment

A 2020 study by researchers at Yale University investigated experimentally how the bystander effect plays out when the victim is a robot. In each session, Connolly et al. (2020) used three Cozmo robots and two people: an in-person researcher, who carried out the abuse, and an in-person volunteer.

Figure 1: Cozmo is a small, programmable robot that can express emotions through its screen, navigate confined spaces, and speak short phrases such as “Hello.” (Source: Flickr, Ars Electronica)

The experiment involved three tasks using wooden blocks of three colors: green, white, and blue. The first task was building a tree from green blocks, the second was building a fort from white blocks, and the third was building a swimming pool from blue blocks. One of the robots, marked yellow, performed all three tasks, while the other two, marked green and blue, served as bystanders. The yellow robot ran a modified script that made it commit errors during each task, and each error was followed by one of four escalating acts of abuse by the researcher:

- Abuse 1 – Pushing the robot down after it picked up a blue block instead of a green one during the tree-building task.

- Abuse 2 – Shaking the robot after it dropped a white block during the fort-building task.

- Abuse 3 – Repeatedly telling the robot “stop coming over here” after it knocked over white blocks during the fort-building task.

- Abuse 4 – Throwing the robot forcefully across the table after it knocked down blue blocks during the pool-building task.

The experiment had two conditions. In the Sad Response condition, the yellow robot displayed a sad face after being abused, and the green and blue bystander robots turned toward it and expressed sadness using preset animations. In the No Response condition, the robots showed no reaction to the abuse.

Essentially, the goal of the experiment was to see whether expressions of emotion by the bystander robots would prompt the human volunteer to intervene pro-socially. This could mean helping the robot up after the researcher pushed it down or threw it across the table, or simply telling the researcher to stop abusing the robot.

Using Poisson regression, a statistical model suited to count data such as the number of interventions, the researchers found significantly more pro-social interventions by volunteers in the Sad Response condition (Count = 11, Estimate = 1.29, SE = 0.65, z = 1.9, p = 0.046) than in the No Response condition (Count = 3). In other words, in this experiment, human bystanders were more likely to help an abused robot when they saw fellow robots react with sadness to its mistreatment.
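The reported estimate and standard error can be sanity-checked by hand. Assume the model is a Poisson regression with a single binary predictor (Sad Response vs. No Response) and equal exposure in both conditions, meaning the same number of sessions per condition. This is an assumption, and the paper's exact model specification may differ. Under it, the maximum-likelihood estimate of the condition coefficient is the log of the ratio of the two counts, and its Wald standard error is the square root of 1/11 + 1/3. The short Python sketch below computes these quantities. The function name `poisson_two_group` is hypothetical.

```python
import math


def poisson_two_group(count_treat: int, count_control: int) -> tuple[float, float, float, float]:
    """Wald test for the group coefficient of a Poisson regression with one binary predictor.

    Hypothetical helper. Assumes both groups had equal exposure (e.g., the same
    number of sessions), so the fitted coefficient reduces to a log ratio of counts.
    Returns (estimate, standard_error, z, two_sided_p).
    """
    if count_treat <= 0 or count_control <= 0:
        raise ValueError("both counts must be positive for the log ratio to exist")
    # Maximum-likelihood estimate of the group coefficient: the log rate ratio.
    estimate = math.log(count_treat / count_control)
    # Wald standard error from the inverse Fisher information of the Poisson model.
    se = math.sqrt(1 / count_treat + 1 / count_control)
    z = estimate / se
    # Two-sided p-value under the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return estimate, se, z, p


est, se, z, p = poisson_two_group(11, 3)  # Sad Response vs. No Response counts
print(f"Estimate = {est:.2f}, SE = {se:.2f}, z = {z:.2f}, p = {p:.3f}")
```

With counts of 11 and 3, this gives an estimate of about 1.30, a standard error of about 0.65, and a two-sided p-value of about 0.046. These values agree with the reported figures within rounding.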

Future implications and improvements

These results suggest that the bystander effect might be counteracted through careful programming and deployment of robots. Sending out a group of robots that display human-like emotions, such as sadness when a fellow robot is abused, may encourage people to intervene and protect robots that are doing an important job.

Future work could extend these results by testing whether bystander robots that display other emotions, such as anger or fear, have a similar effect. The design could also be refined by using different types of robots, staging the experiment in a more realistic crime setting, and increasing the number of robots.

Law enforcement agencies that plan to rely on robots might consider deploying them in groups to discourage abuse. The robots should also operate under adequate on-the-ground supervision so that officers can assist them quickly. They could even be designed to recognize and respond to hostile people themselves, making them less reliant on ordinary citizens, who may be poorly equipped to stop the abuse.

References

Ackerman, E. (2020, August 19). Can Robots Keep Humans from Abusing Other Robots? IEEE Spectrum. https://spectrum.ieee.org/can-robots-keep-humans-from-abusing-other-robots

Cherry, K. (2018). Why Bystanders Sometimes Fail to Help. Verywell Mind. https://www.verywellmind.com/the-bystander-effect-2795899

Connolly, J., Mocz, V., Salomons, N., Valdez, J., Tsoi, N., Scassellati, B., & Vázquez, M. (2020). Prompting Prosocial Human Interventions in Response to Robot Mistreatment. Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction. https://doi.org/10.1145/3319502.3374781

P, E. (2020, July 8). “Bystander Effect” Also Applies to Other Animals: A Phenomenon Not Exclusive To Humans. Science Times. https://www.sciencetimes.com/articles/26380/20200708/bystander-effect-also-applies-to-other-animals-a-phenomenon-not-exclusive-to-humans.htm

Strickland, J. (2010, March 22). Could computers and robots become conscious? If so, what happens then? HowStuffWorks. https://science.howstuffworks.com/robot-computer-conscious.htm

Related Posts

The Effects of Friends and Family on Daily Stress

Figure 1: This figure shows several of the different effects...

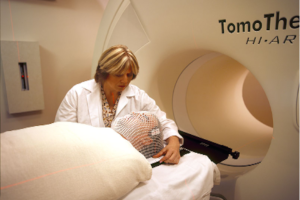

Read MoreUnderstanding the Social Factors Affecting Cancer Therapy

Cover Image: A patient being prepared for radiation therapy. (Source:...

Read MoreConcierge Medicine Could Reduce Senior Health Inequities in Illinois

This publication is in proud partnership with Project UNITY’s Catalyst Academy 2023...

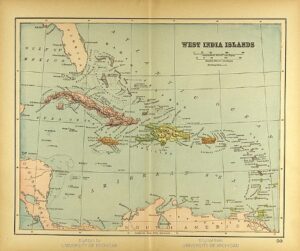

Read MoreCaribbean History through Genetics and Archaeology

Figure 1: A map of the Caribbean Islands from 1894...

Read MoreCOVID-19 and its Implications for Adolescent Mental Health

Covid 19 and Isolation (Source: created by author) Abstract The...

Read MoreThe Public Health Crisis of Alzheimer’s Disease in African American and Hispanic Populations

This publication is in proud partnership with Project UNITY’s Catalyst...

Frankline Misango